So let’s try to break the model apart and look at how it functions.

The Transformer was proposed in the paper Attention is All You Need. A TensorFlow implementation of it is available as a part of the Tensor2Tensor package. Harvard’s NLP group created a guide annotating the paper with a PyTorch implementation. In this post, we will attempt to oversimplify things a bit and introduce the concepts one by one, to hopefully make them easier to understand for people without in-depth knowledge of the subject matter.

2020 Update: I’ve created a “Narrated Transformer” video which is a gentler approach to the topic.

The word at each position passes through a self-attention process. Then, they each pass through a feed-forward neural network: the exact same network, with each vector flowing through it separately.

Don’t be fooled by me throwing around the word “self-attention” like it’s a concept everyone should be familiar with. I had personally never come across the concept until reading the Attention is All You Need paper.

Say the following sentence is an input sentence we want to translate:

“The animal didn't cross the street because it was too tired”

What does “it” in this sentence refer to? Is it referring to the street or to the animal? It’s a simple question to a human, but not as simple to an algorithm.

When the model is processing the word “it”, self-attention allows it to associate “it” with “animal”.

As the model processes each word (each position in the input sequence), self-attention allows it to look at other positions in the input sequence for clues that can help lead to a better encoding for this word.
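
To make the idea of “looking at other positions” a little more concrete, here is a minimal sketch of self-attention in NumPy, using the scaled dot-product form from the Attention is All You Need paper. Everything numeric in it is an assumption made for illustration: the embeddings `X` and the projection matrices `W_q`, `W_k`, `W_v` are random rather than learned, and the sizes `d_model` and `d_k` are arbitrary. Because nothing is trained, the weights will not actually link “it” to “animal”; the sketch only shows the mechanics.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum first for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention over one sequence.

    X has shape (seq_len, d_model); each W_* has shape (d_model, d_k).
    Returns the new encodings and the attention weights.
    """
    Q = X @ W_q  # what each position is looking for
    K = X @ W_k  # what each position offers to others
    V = X @ W_v  # the content that gets mixed together
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # (seq_len, seq_len)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights

tokens = "The animal didn't cross the street because it was too tired".split()

# Hypothetical sizes and random values, standing in for learned parameters.
rng = np.random.default_rng(0)
d_model, d_k = 8, 4
X = rng.normal(size=(len(tokens), d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = self_attention(X, W_q, W_k, W_v)

# How much the position holding "it" attends to every position in the sentence.
it_row = weights[tokens.index("it")]
for token, weight in zip(tokens, it_row):
    print(f"{token:>8s}  {weight:.3f}")
```

Each row of `weights` says how strongly one position draws on every other position when its new encoding is computed. In a trained model, the row for “it” is where a large weight on “animal” would show up.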

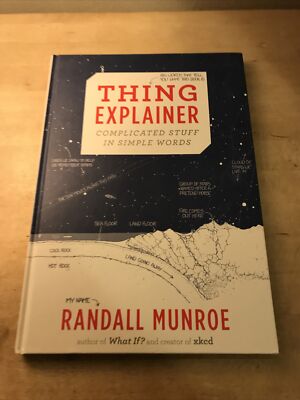

Hacker News (65 points, 4 comments), Reddit r/MachineLearning (29 points, 3 comments)

Translations: Arabic, Chinese (Simplified) 1, Chinese (Simplified) 2, French 1, French 2, Japanese, Korean, Russian, Spanish, Vietnamese

Watch: MIT’s Deep Learning State of the Art lecture referencing this post

In the previous post, we looked at Attention, a ubiquitous method in modern deep learning models. Attention is a concept that helped improve the performance of neural machine translation applications. In this post, we will look at The Transformer, a model that uses attention to boost the speed with which these models can be trained. The Transformer outperforms the Google Neural Machine Translation model in specific tasks. The biggest benefit, however, comes from how The Transformer lends itself to parallelization. It is in fact Google Cloud’s recommendation to use The Transformer as a reference model for their Cloud TPU offering.

The creator of xkcd and author of What If?: Serious Scientific Answers to Absurd Hypothetical Questions has a new book coming out in a week or so. In Thing Explainer: Complicated Stuff in Simple Words, he has done just what the title promises: he has taken sophisticated works and broken them down to simple illustrations and words. In fact, throughout the entire book, Munroe limited his word usage to 1000 of the most commonly used words in our language. (This list of words is included in the book, but “thousand” must not be among them, because the list is titled “The Ten Hundred Words People Use Most.”)

Using these simple words and line drawings, Munroe breaks down the periodic table, explains the difference between the U.S. Constitution and the USS Constitution (and how each works), and introduces us to the Large Hadron Collider (the big tiny-thing hitter). Munroe uses blueprint drawings to explain 45 complicated natural phenomena, like the animal cell, tectonic plates and the night sky, as well as works of human ingenuity, like the inner workings of a padlock, a nuclear reactor and a cockpit.

The book is recommended for ages 5 to 105, and I think that’s pretty spot-on. Forty-something engineers who grew up studying David Macaulay’s Castle before graduating to his The Way Things Work, or those who loved learning about the anatomy of a city in Kate Ascher’s The Works, will painstakingly devour it page by page before giving it to their offspring. (If you can pry it out of their hands.) And young inquiring minds can get lost in it… in a good way.

A friend of mine who matches the above description of the forty-something engineer challenged me to write this review using Munroe’s list of 1000 words. I gave up early on, but was pleased to discover it wouldn’t be as difficult as expected, because Munroe provides a “simplewriter” on his website.
